About "glitchesarelikewildanimals!"

Karin + Shane Denson

Part of the larger Scannable Images project, the virtual installation glitchesarelikewildanimals! consists of several static and animated images, along with video content and generative text superimposed upon them when viewed through the Wikitude AR browser (see here for a brief "user's guide" to the project).

Apart from the augmented reality components, the base layer of the installation consists of two visible objects:

1) An animated background (.gif) derived from a video experiment: "Evie Metabolic Images ^9". One morning our dog Evie, still a puppy, slept peacefully on the floor, curled up in a patch of sunlight with her famous blue crocodile. We filmed her with an iPhone, which nicely caught the subtle motion of her breathing. In order to bring animal and digital metabolisms into closer contact, the resulting video was uploaded to Facebook, and the same iPhone was then used to film the computer screen as the video played. This "second generation" video, too, was uploaded to Facebook and again filmed, producing a "third generation" video. The process was repeated until we reached the ninth generation. All nine videos were then synchronized and composited in a single frame. This composite video, a little over a minute in length, was then subjected to a process of "databending": we opened it in Audacity, an audio-editing program (and hence "the wrong tool" for the job), and exported it back out as video, a process that degraded the digital video information and hence produced a variety of glitches (which had to be "captured" in a stable video format). The resulting video was then transformed into the looping animated gif you see here.

2) A foreground image with four panels, which serves as a trigger for the installation's AR components. The four panels are:

a) A digital photograph of (who else?) Evie the dog — the original "wild animal" referenced in the title "glitchesarelikewildanimals!" This image, taken with an iPhone 4S, served as the initial basis for the augmented generative audio-video overlay that forms the core of glitchesarelikewildanimals! (see below for more info).

b) The text that inspired the title of this installation. This phrase, "glitches are like wild animals," derives from an online tutorial on databending, the author of which explains: "For some reasons (cause players work in different ways) you'll get sometimes differents results while opening your glitched file into VLC or MPC etc... so If you like what you get into VLC and not what you see in MPC, then export it again directly from VLC for example, which will give a solid video file of what you saw in it, and if VLC can open it but crash while re-exporting it in a solid file, don't hesitate to use video capture program like FRAPS to record what VLC is showing, because sometimes, capturing a glitch in clean file can be seen as the main part of the job cause glitches are like wild animals in a certain way, you can see them, but putting them into a clean video file structure is a mess."

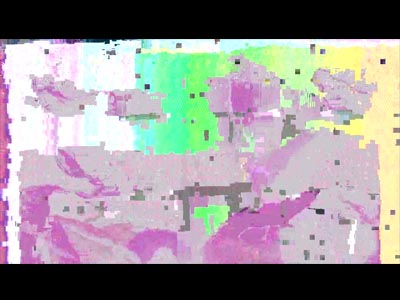

c) A video still from a databent video derived from the image of Evie the dog (described above). To make the video, the iPhone photo was opened in a text editor and manipulated extensively to produce visible glitches. Then, while recording the computer screen with screen capture software, the glitching process was reversed, step by step, producing a video documenting the photo's transformation from an extremely glitched form back to its initial state. This video was fed into the audio program Audacity and exported back out, producing another layer of glitch and degradation. The resulting video was synced with generative audio, itself the product of "translating" the iPhone photo into sound. Two versions were produced, utilizing different codecs. The two video versions are played in sync in the AR overlay (see below). (More information about the production process involved can be found here: "The Glitch as Propaedeutic to a Materialist Theory of Post-Cinematic Affect".)

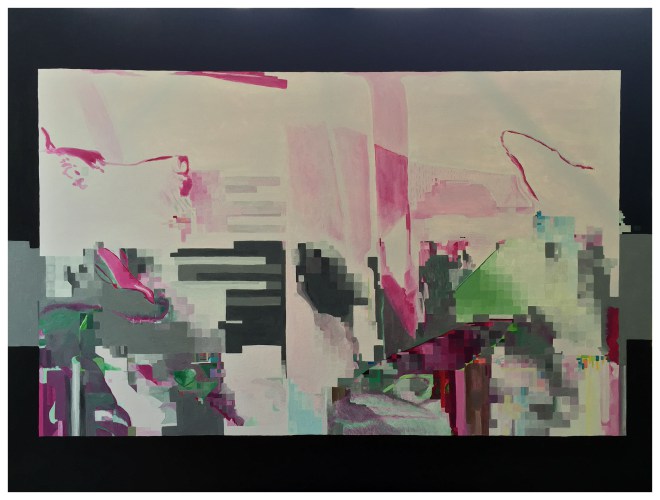

d) Finally, a photo of a large canvas, 48" x 36", hand-painted by Karin Denson to depict a video still from the other version (codec) of the same video. Painting the image in physical media represents another means of "capturing" the glitch-qua-wild-animal, one that explores the interface between the imperceptible microtemporality of digital image processing (the image flashes on the screen faster than it can be perceived) and its transformation into a perceivable form. Moreover, the painting presents a meditation on the boundary and interaction between contemporary forms of virtuality and materiality, especially when the physical painting of a digital object/process is overlaid with an augmented layer that exists somewhere in between.
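
For readers curious about what databending does at the level of the file, the following is a minimal sketch in Python, not a reconstruction of our actual Audacity workflow. It assumes that the file's bytes are read as unsigned 8-bit audio samples and that an echo effect is applied to them; the function name `databend` and the parameters `header_size`, `delay`, and `decay` are hypothetical choices made for illustration. The first bytes are left untouched on the assumption that they hold header information a player needs in order to open the file at all.

```python
def databend(data: bytes, header_size: int = 512,
             delay: int = 2000, decay: float = 0.5) -> bytes:
    """Treat raw file bytes as unsigned 8-bit audio and add a feedback echo.

    header_size, delay, and decay are illustrative assumptions,
    not values taken from the installation.
    """
    # Keep the (assumed) header intact so the file remains openable.
    header = data[:header_size]
    body = bytearray(data[header_size:])

    # Walk forward through the "samples"; because earlier bytes have
    # already been modified, each echo feeds into later echoes.
    for i in range(delay, len(body)):
        # 8-bit unsigned audio is centered on 128, so shift to a signed
        # range, mix in the delayed sample, then shift back.
        current = body[i] - 128
        delayed = body[i - delay] - 128
        mixed = int(current + decay * delayed) + 128
        # Clip to the valid byte range, as an audio editor would clip peaks.
        body[i] = max(0, min(255, mixed))

    return header + bytes(body)
```

Applied to a real image or video file (for example `open("out.jpg", "wb").write(databend(open("in.jpg", "rb").read()))`, with hypothetical filenames), the result may or may not open in a given player, which is precisely why the glitch has to be "captured" afterwards.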

When scanned with the AR browser Wikitude (user's guide here), several additional components become visible (and audible):

A) The databent video that forms the core of "glitchesarelikewildanimals!" As described above, the video is presented in two different versions, based on two processes of databending transformation (and two video codecs utilized in the process). The audio of each version is panned to the left or the right, corresponding to the videos' placement on the screen. It is best listened to with headphones, as the two channels slowly go out of sync over the course of the video, adding another layer to the installation.

B) An HTML overlay displaying a glitched version of Evie's photo and randomly selected sentences from our project statement "Scannable Images: Materialities of Post-Cinema after Video," which can be read in full on the project's HOME page.

C) Another HTML overlay, which uses Markov chains to generate new sentences on the basis of "Crazy Cameras, Discorrelated Images, and the Post-Perceptual Mediation of Post-Cinematic Affect," a text by Shane Denson that theorizes the phenomenological and affective dynamics of post-cinematic imaging technologies. The associative logic of the text-generating algorithm formally mimics the logic of contiguity and promiscuous interconnection by which post-cinema operates, and according to which it distinguishes itself from the human-centered perceptual logic of classical cinema. And it is these dynamics that we hope to capture and mediate — both thematically and materially — to the viewer of "glitchesarelikewildanimals!" and the larger project of "Scannable Images."
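
The Markov-chain text generation mentioned in C) can be sketched as follows. This is a simplified word-level illustration in Python under our own assumptions, not the code that runs in the HTML overlay; the function names `build_chain` and `generate` and the parameters `order`, `length`, and `seed` are hypothetical. The chain records which words follow each sequence of `order` words in the source text, and new sentences are produced by repeatedly picking one of the recorded followers at random.

```python
import random
from collections import defaultdict


def build_chain(text: str, order: int = 2) -> dict:
    """Map each run of `order` consecutive words to the words that follow it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain


def generate(chain: dict, length: int = 20, seed=None) -> str:
    """Produce up to `length` words by walking the chain from a random state."""
    rng = random.Random(seed)
    # Start from a randomly chosen state that occurs in the source text.
    state = rng.choice(list(chain.keys()))
    output = list(state)
    for _ in range(length - len(state)):
        followers = chain.get(state)
        if not followers:
            # Dead end: this state only occurs at the very end of the source.
            break
        output.append(rng.choice(followers))
        # Slide the window forward by one word.
        state = tuple(output[-len(state):])
    return " ".join(output)
```

Every transition in the generated text occurs somewhere in the source, but the path through those transitions is new each time, so the output is locally familiar and globally unpredictable.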
